Publications


Preprints

2025

- preprint

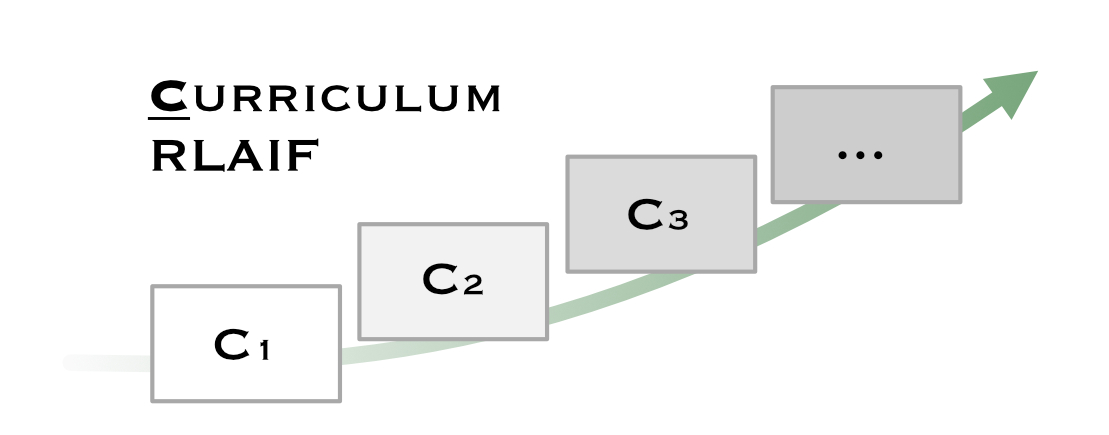

Curriculum-RLAIF: Curriculum Alignment with Reinforcement Learning from AI Feedback. May 2025

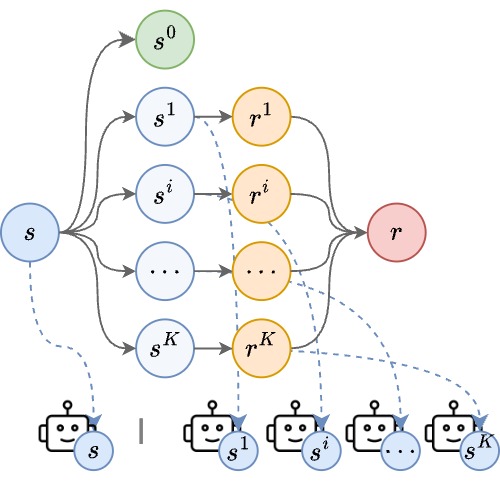

Reward models trained with conventional Reinforcement Learning from AI Feedback (RLAIF) methods suffer from limited generalizability, which hinders the alignment performance of the policy model during reinforcement learning (RL). This challenge stems from various issues, including distribution shift, preference label noise, and mismatches between overly challenging samples and model capacity. In this paper, we attempt to enhance the generalizability of reward models through a data-centric approach, driven by the insight that these issues are inherently intertwined from the perspective of data difficulty. To address this, we propose a novel framework, Curriculum-RLAIF, which constructs preference pairs with varying difficulty levels and produces a curriculum that progressively incorporates preference pairs of increasing difficulty for reward model training. Our experimental results suggest that reward models trained with Curriculum-RLAIF achieve improved generalizability, increasing the alignment performance of the policy model by a large margin without incurring additional inference costs compared to various non-curriculum baselines. Detailed analysis and comparisons with alternative approaches, including data selection via external pretrained reward models or internal self-selection mechanisms, as well as other curriculum strategies, further demonstrate the superiority of our approach in terms of simplicity, efficiency, and effectiveness.

@preprint{Li25CurriculumRLAIFCurriculum, title = {Curriculum-{{RLAIF}}: Curriculum Alignment with Reinforcement Learning from {{AI}} Feedback}, shorttitle = {Curriculum-{{RLAIF}}}, author = {Li, Mengdi and Lin, Jiaye and Zhao, Xufeng and Lu, Wenhao and Zhao, Peilin and Wermter, Stefan and Wang, Di}, year = {2025}, month = may, number = {arXiv:2505.20075}, eprint = {2505.20075}, primaryclass = {cs}, publisher = {arXiv}, doi = {10.48550/arXiv.2505.20075}, url = {http://arxiv.org/abs/2505.20075}, urldate = {2025-05-27}, archiveprefix = {arXiv}, langid = {english}, }

- preprint

LLM+MAP: Bimanual Robot Task Planning Using Large Language Models and Planning Domain Definition Language. Mar 2025

Bimanual robotic manipulation provides significant versatility, but also presents an inherent challenge due to the complexity involved in the spatial and temporal coordination between two hands. Existing works predominantly focus on attaining human-level manipulation skills for robotic hands, yet little attention has been paid to task planning on long-horizon timescales. With their outstanding in-context learning and zero-shot generation abilities, Large Language Models (LLMs) have been applied and grounded in diverse robotic embodiments to facilitate task planning. However, LLMs still suffer from errors in long-horizon reasoning and from hallucinations in complex robotic tasks, lacking a guarantee of logical correctness when generating the plan. Previous works, such as LLM+P, extended LLMs with symbolic planners. However, none have been successfully applied to bimanual robots. New challenges inevitably arise in bimanual manipulation, necessitating not only effective task decomposition but also efficient task allocation. To address these challenges, this paper introduces LLM+MAP, a bimanual planning framework that integrates LLM reasoning and multi-agent planning, automating effective and efficient bimanual task planning. We conduct simulated experiments on various long-horizon manipulation tasks of differing complexity. Our method is built using GPT-4o as the backend, and we compare its performance against plans generated directly by LLMs, including GPT-4o, V3 and also recent strong reasoning models o1 and R1. By analyzing metrics such as planning time, success rate, group debits, and planning-step reduction rate, we demonstrate the superior performance of LLM+MAP, while also providing insights into robotic reasoning. Code is available at https://github.com/Kchu/LLM-MAP.

@preprint{Chu25LLM+MAPBimanual, title = {{{LLM}}+{{MAP}}: Bimanual Robot Task Planning Using Large Language Models and Planning Domain Definition Language}, shorttitle = {Llm+map}, author = {Chu, Kun and Zhao, Xufeng and Weber, Cornelius and Wermter, Stefan}, year = {2025}, month = mar, number = {arXiv:2503.17309}, eprint = {2503.17309}, primaryclass = {cs}, publisher = {arXiv}, doi = {10.48550/arXiv.2503.17309}, url = {http://arxiv.org/abs/2503.17309}, urldate = {2025-03-25}, archiveprefix = {arXiv}, langid = {english}, journaltitle = {}, }

Conference Articles

2025

- workshop

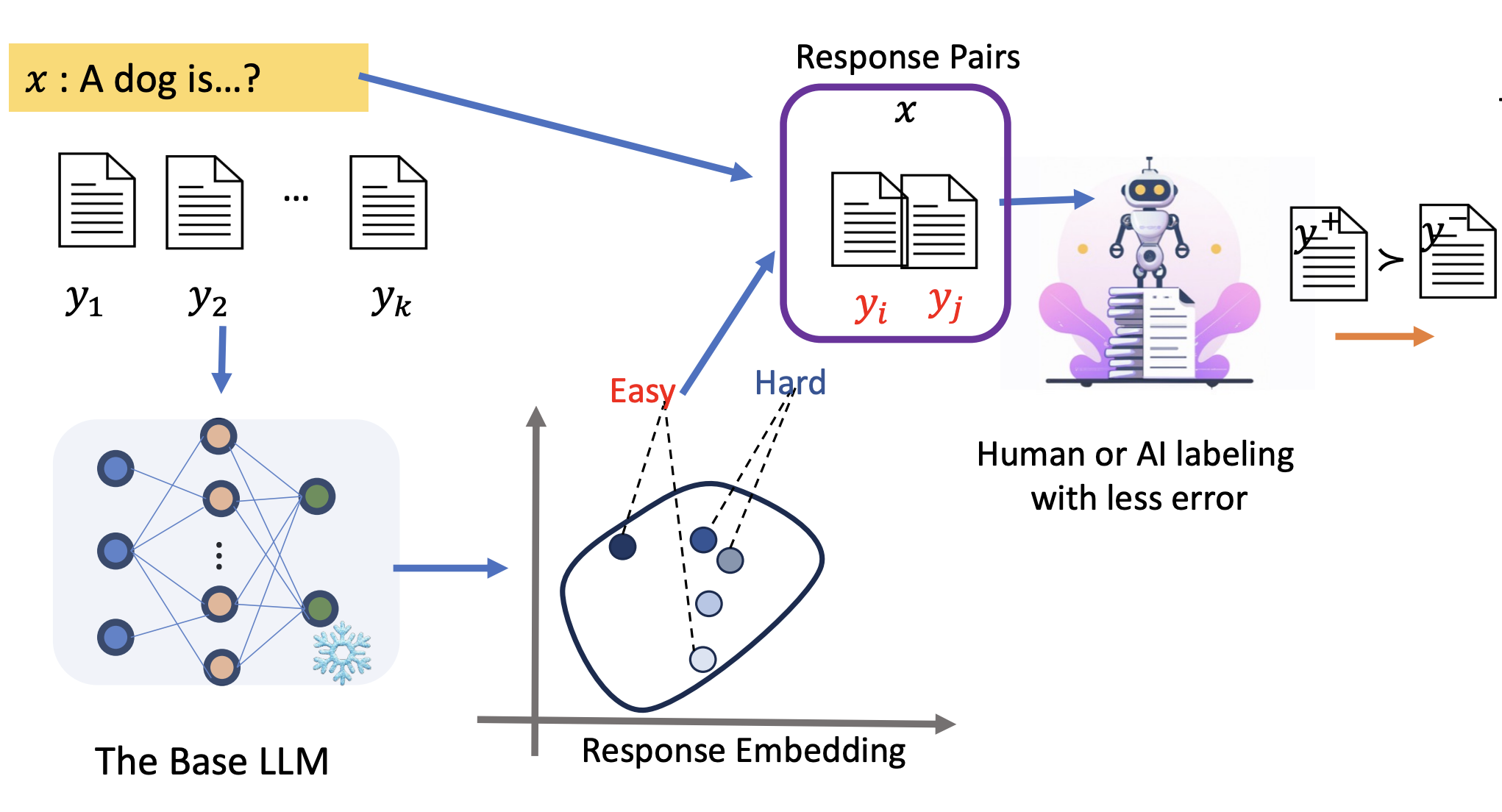

REAL: Response Embedding-based Alignment for LLMs. In IJCAI 2025 workshop Causal Learning for Recommendation Systems, Jun 2025

Aligning large language models (LLMs) to human preferences is a crucial step in building helpful and safe AI tools, which usually involve training on supervised datasets. Popular algorithms such as Direct Preference Optimization rely on pairs of AI-generated responses ranked according to human feedback. The labeling process is the most labor-intensive and costly part of the alignment pipeline, and improving its efficiency would have a meaningful impact on AI development. We propose a strategy for sampling a high-quality training dataset that focuses on acquiring the most informative response pairs for labeling out of a set of AI-generated responses. Experimental results on synthetic HH-RLHF benchmarks indicate that choosing dissimilar response pairs enhances the direct alignment of LLMs while reducing inherited labeling errors. We also applied our method to the real-world dataset SHP2, selecting optimal pairs from multiple responses. The model aligned on dissimilar response pairs obtained the best win rate on the dialogue task. Our findings suggest that focusing on less similar pairs can improve the efficiency of LLM alignment, saving up to 65% of annotators’ work.

@inproceedings{Zhang24REALResponse, title = {{{REAL}}: {{Response Embedding-based Alignment}} for {{LLMs}}}, shorttitle = {{{REAL}}}, author = {Zhang, Honggen and Zhao, Xufeng and Molybog, Igor and Zhang, June}, year = {2025}, month = jun, number = {arXiv:2409.17169}, eprint = {2409.17169}, primaryclass = {cs}, publisher = {arXiv}, doi = {10.48550/arXiv.2409.17169}, url = {http://arxiv.org/abs/2409.17169}, urldate = {2024-09-27}, archiveprefix = {arXiv}, pages = {2409.17169}, booktitle = {IJCAI 2025 workshop Causal Learning for Recommendation Systems}, }
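The REAL abstract above describes selecting dissimilar response pairs for labeling. As a rough illustration only, the following sketch picks the least similar pair among a prompt's candidate responses, given precomputed embedding vectors. The use of cosine similarity, the function names, and the toy vectors are assumptions made for this example; the abstract does not specify the embedding model or the similarity measure, and this is not the authors' implementation.

```python
import math
from itertools import combinations


def cosine_similarity(u, v):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_dissimilar_pair(embeddings):
    """Return indices (i, j), i < j, of the two least similar responses.

    `embeddings` is a hypothetical list of response embeddings for one prompt;
    how they are produced is outside the scope of this sketch.
    """
    if len(embeddings) < 2:
        raise ValueError("need at least two responses to form a pair")
    return min(
        combinations(range(len(embeddings)), 2),
        key=lambda pair: cosine_similarity(embeddings[pair[0]], embeddings[pair[1]]),
    )


# Toy example: responses 0 and 1 are near-duplicates, response 2 differs.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
selected = most_dissimilar_pair(toy_embeddings)
```

In this toy case the selected pair is the one formed by the first and third responses, which would then be sent to annotators for preference labeling.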

2024

- CoRL 2024 / ICRA@40

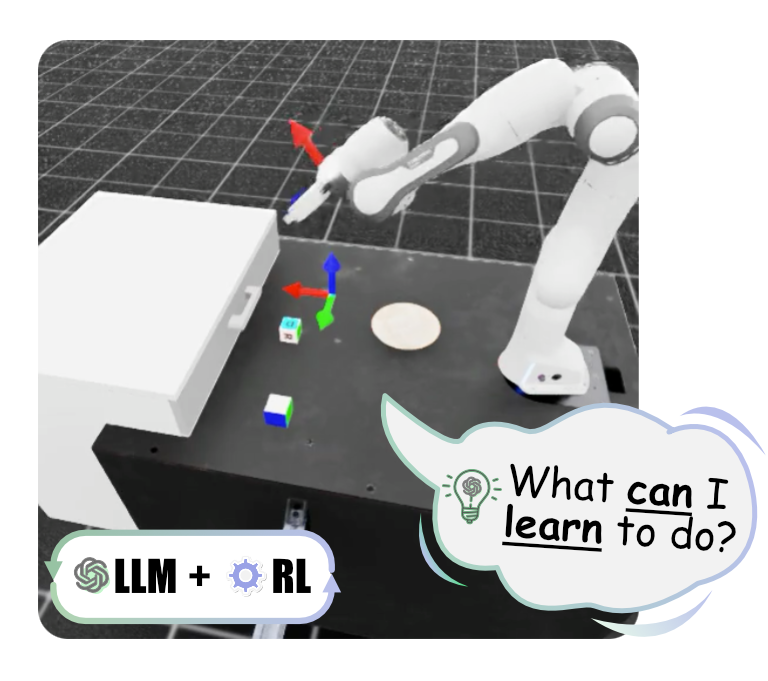

Agentic Skill Discovery. Xufeng Zhao, Cornelius Weber, and Stefan Wermter. In

• CoRL 2024 Workshop on Language and Robot Learning: Language as an Interface

• 40th Anniversary of the IEEE International Conference on Robotics and Automation (ICRA@40), Nov 2024

Language-conditioned robotic skills make it possible to apply the high-level reasoning of Large Language Models (LLMs) to low-level robotic control. A remaining challenge is to acquire a diverse set of fundamental skills. Existing approaches either manually decompose a complex task into atomic robotic actions in a top-down fashion, or bootstrap as many combinations as possible in a bottom-up fashion to cover a wider range of task possibilities. These decompositions or combinations, however, require an initial skill library. For example, a "grasping" capability can never emerge from a skill library containing only diverse "pushing" skills. Existing skill discovery techniques with reinforcement learning acquire skills by an exhaustive exploration but often yield non-meaningful behaviors. In this study, we introduce a novel framework for skill discovery that is entirely driven by LLMs. The framework begins with an LLM generating task proposals based on the provided scene description and the robot’s configurations, aiming to incrementally acquire new skills upon task completion. For each proposed task, a series of reinforcement learning processes are initiated, utilizing reward and success determination functions sampled by the LLM to develop the corresponding policy. The reliability and trustworthiness of learned behaviors are further ensured by an independent vision-language model. We show that starting with zero skill, the ASD skill library emerges and expands to more and more meaningful and reliable skills, enabling the robot to efficiently further propose and complete advanced tasks. The project page can be found at: https://agentic-skill-discovery.github.io.

@inproceedings{Zhao24AgenticSkill, title = {Agentic {{Skill Discovery}}}, author = {Zhao, Xufeng and Weber, Cornelius and Wermter, Stefan}, year = {2024}, month = nov, booktitle = {CoRL 2024 Workshop on Language and Robot Learning: Language as an Interface; 40th Anniversary of the IEEE International Conference on Robotics and Automation (ICRA@40)}, url = {https://agentic-skill-discovery.github.io/}, featured = {true}, }

- Details Make a Difference: Object State-Sensitive Neurorobotic Task Planning. In The 33rd International Conference on Artificial Neural Networks (ICANN 2024), Sep 2024

The state of an object reflects its current status or condition and is important for a robot’s task planning and manipulation. However, detecting an object’s state and generating a state-sensitive plan for robots is challenging. Recently, pre-trained Large Language Models (LLMs) and Vision-Language Models (VLMs) have shown impressive capabilities in generating plans. However, to the best of our knowledge, there is hardly any investigation on whether LLMs or VLMs can also generate object state-sensitive plans. To study this, we introduce an Object State-Sensitive Agent (OSSA), a task-planning agent empowered by pre-trained neural networks. We propose two methods for OSSA: (i) a modular model consisting of a pre-trained vision processing module (dense captioning model, DCM) and a natural language processing model (LLM), and (ii) a monolithic model consisting only of a VLM. To quantitatively evaluate the performances of the two methods, we use tabletop scenarios where the task is to clear the table. We contribute a multimodal benchmark dataset that takes object states into consideration. Our results show that both methods can be used for object state-sensitive tasks, but the monolithic approach outperforms the modular approach. The code for OSSA is available at https://github.com/Xiao-wen-Sun/OSSA

@inproceedings{Sun24DetailsMake, title = {Details {{Make}} a {{Difference}}: {{Object State-Sensitive Neurorobotic Task Planning}}}, shorttitle = {Details {{Make}} a {{Difference}}}, author = {Sun, Xiaowen and Zhao, Xufeng and Lee, Jae Hee and Lu, Wenhao and Kerzel, Matthias and Wermter, Stefan}, year = {2024}, month = sep, primaryclass = {cs}, publisher = {arXiv}, doi = {10.48550/arXiv.2406.09988}, url = {http://arxiv.org/abs/2406.09988}, booktitle = {The 33rd International Conference on Artificial Neural Networks (ICANN 2024)}, place = {Switzerland}, }

- Large Language Models for Orchestrating Bimanual Robots. In 2024 IEEE-RAS International Conference on Humanoid Robots, Nov 2024

Although there has been rapid progress in endowing robots with the ability to solve complex manipulation tasks, generating control policies for bimanual robots to solve tasks involving two hands is still challenging because of the difficulties in effective temporal and spatial coordination. With emergent abilities in terms of step-by-step reasoning and in-context learning, Large Language Models (LLMs) have taken control of a variety of robotic tasks. However, the nature of language communication via a single sequence of discrete symbols makes LLM-based coordination in continuous space a particular challenge for bimanual tasks. To tackle this challenge for the first time by an LLM, we present LAnguage-model-based Bimanual ORchestration (LABOR), an agent utilizing an LLM to analyze task configurations and devise coordination control policies for addressing long-horizon bimanual tasks. In the simulated environment, the LABOR agent is evaluated through several everyday tasks on the NICOL humanoid robot. Reported success rates indicate that overall coordination efficiency is close to optimal performance, while the analysis of failure causes, classified into spatial and temporal coordination and skill selection, shows that these vary over tasks. The project website can be found at https://labor-agent.github.io/.

@inproceedings{Chu24LargeLanguage, title = {Large Language Models for Orchestrating Bimanual Robots}, author = {Chu, Kun and Zhao, Xufeng and Weber, Cornelius and Li, Mengdi and Lu, Wenhao and Wermter, Stefan}, year = {2024}, month = nov, booktitle = {2024 IEEE-RAS International Conference on Humanoid Robots}, url = {https://labor-agent.github.io/}, primaryclass = {cs.RO}, archiveprefix = {arxiv}, pages = {2404.02018}, }

- Enhancing Zero-Shot Chain-of-Thought Reasoning in Large Language Models through Logic. In 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), May 2024

Recent advancements in large language models have showcased their remarkable generalizability across various domains. However, their reasoning abilities still have significant room for improvement, especially when confronted with scenarios requiring multi-step reasoning. Although large language models possess extensive knowledge, their reasoning often fails to effectively utilize this knowledge to establish a coherent thinking paradigm. These models sometimes show hallucinations as their reasoning procedures are unconstrained by logical principles. Aiming at improving the zero-shot chain-of-thought reasoning ability of large language models, we propose LoT (Logical Thoughts), a self-improvement prompting framework that leverages principles rooted in symbolic logic, particularly Reductio ad Absurdum, to systematically verify and rectify the reasoning processes step by step. Experimental evaluations conducted on language tasks in diverse domains, including arithmetic, commonsense, symbolic, causal inference, and social problems, demonstrate the efficacy of enhanced reasoning by logic.

@inproceedings{Zhao23EnhancingZeroShot, title = {Enhancing {{Zero-Shot Chain-of-Thought Reasoning}} in {{Large Language Models}} through {{Logic}}}, author = {Zhao, Xufeng and Li, Mengdi and Lu, Wenhao and Weber, Cornelius and Lee, Jae Hee and Chu, Kun and Wermter, Stefan}, booktitle = {2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024)}, place = {Turin, Italy}, year = {2024}, month = may, keywords = {Large Language Models, Chain-of-Thought, Reasoning, Logic, Reductio ad Absurdum}, featured = {true}, url = {https://arxiv.org/abs/2309.13339}, }

- Causal State Distillation for Explainable Reinforcement Learning. In 3rd Conference on Causal Learning and Reasoning (CLeaR 2024), Apr 2024

Reinforcement learning (RL) is a powerful technique for training intelligent agents, but understanding why these agents make specific decisions can be quite challenging. This lack of transparency in RL models has been a long-standing problem, making it difficult for users to grasp the reasons behind an agent’s behaviour. Various approaches have been explored to address this problem, with one promising avenue being reward decomposition (RD). RD is appealing as it sidesteps some of the concerns associated with other methods that attempt to rationalize an agent’s behaviour in a post-hoc manner. RD works by exposing various facets of the rewards that contribute to the agent’s objectives during training. However, RD alone has limitations as it primarily offers insights based on sub-rewards and does not delve into the intricate cause-and-effect relationships that occur within an RL agent’s neural model. In this paper, we present an extension of RD that goes beyond sub-rewards to provide more informative explanations. Our approach is centred on a causal learning framework that leverages information-theoretic measures for explanation objectives that encourage three crucial properties of causal factors: causal sufficiency, sparseness, and orthogonality. These properties help us distill the cause-and-effect relationships between the agent’s states and actions or rewards, allowing for a deeper understanding of its decision-making processes. Our framework is designed to generate local explanations and can be applied to a wide range of RL tasks with multiple reward channels. Through a series of experiments, we demonstrate that our approach offers more meaningful and insightful explanations for the agent’s action selections.

@inproceedings{Lu23CausalState, title = {Causal {{State Distillation}} for {{Explainable Reinforcement Learning}}}, author = {Lu, Wenhao and Zhao, Xufeng and Fryen, Thilo and Lee, Jae Hee and Li, Mengdi and Magg, Sven and Wermter, Stefan}, booktitle = {3rd Conference on Causal Learning and Reasoning (CLeaR 2024)}, place = {Los Angeles, California, USA}, year = {2024}, month = apr, url = {https://arxiv.org/abs/2401.00104}, keywords = {Computer Science - Artificial Intelligence,Computer Science - Machine Learning,Statistics - Methodology}, }

2023

- CoRL 2023 - CRL

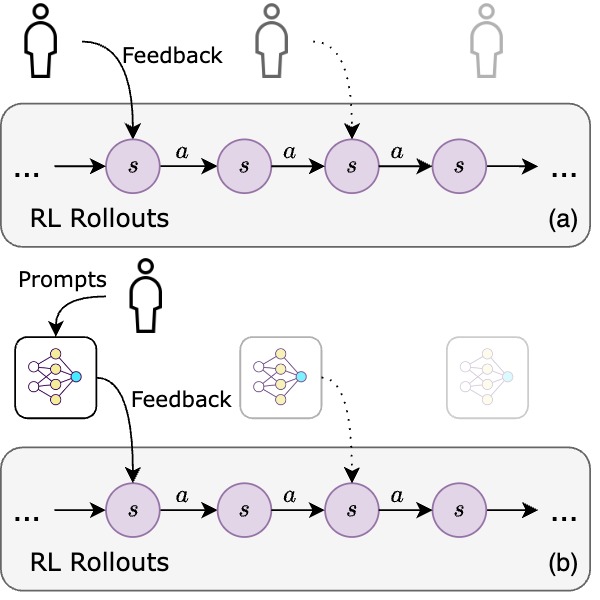

Accelerating Reinforcement Learning of Robotic Manipulations via Feedback from Large Language Models. In 7th Conference on Robot Learning (CoRL 2023) Workshop, Nov 2023

Reinforcement Learning (RL) plays an important role in the robotic manipulation domain since it allows self-learning from trial-and-error interactions with the environment. Still, sample efficiency and reward specification seriously limit its potential. One possible solution involves learning from expert guidance. However, obtaining a human expert is impractical due to the high cost of supervising an RL agent, and developing an automatic supervisor is a challenging endeavor. Large Language Models (LLMs) demonstrate remarkable abilities to provide human-like feedback on user inputs in natural language. Nevertheless, they are not designed to directly control low-level robotic motions, as their pretraining is based on vast internet data rather than specific robotics data. In this paper, we introduce the Lafite-RL (Language agent feedback interactive Reinforcement Learning) framework, which enables RL agents to learn robotic tasks efficiently by taking advantage of LLMs’ timely feedback. Our experiments conducted on RLBench tasks illustrate that, with simple prompt design in natural language, the Lafite-RL agent exhibits improved learning capabilities when guided by an LLM. It outperforms the baseline in terms of both learning efficiency and success rate, underscoring the efficacy of the rewards provided by an LLM.

@inproceedings{chu2023accelerating, title = {Accelerating Reinforcement Learning of Robotic Manipulations via Feedback from Large Language Models}, url = {https://openreview.net/group?id=robot-learning.org/CoRL/2023/Workshop/CRL_WS#tab-accept-oral}, author = {Chu, Kun and Zhao, Xufeng and Weber, Cornelius and Li, Mengdi and Wermter, Stefan}, booktitle = {7th Conference on Robot Learning (CoRL 2023) Workshop}, place = {Atlanta, Georgia USA}, year = {2023}, month = nov, }

- Chat with the Environment: Interactive Multimodal Perception Using Large Language Models. In 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2023

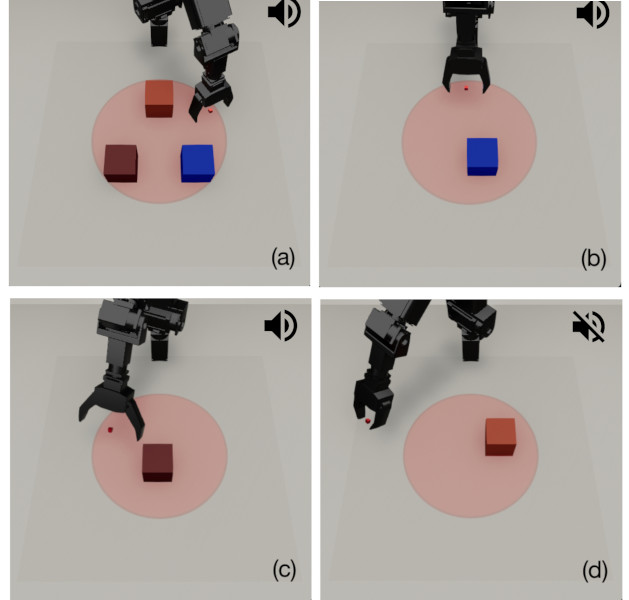

Programming robot behavior in a complex world faces challenges on multiple levels, from dextrous low-level skills to high-level planning and reasoning. Recent pre-trained Large Language Models (LLMs) have shown remarkable reasoning ability in few-shot robotic planning. However, it remains challenging to ground LLMs in multimodal sensory input and continuous action output, while enabling a robot to interact with its environment and acquire novel information as its policies unfold. We develop a robot interaction scenario with a partially observable state, which necessitates a robot to decide on a range of epistemic actions in order to sample sensory information among multiple modalities, before being able to execute the task correctly. Matcha (Multimodal environment chatting) agent, an interactive perception framework, is therefore proposed with an LLM as its backbone, whose ability is exploited to instruct epistemic actions and to reason over the resulting multimodal sensations (vision, sound, haptics, proprioception), as well as to plan an entire task execution based on the interactively acquired information. Our study demonstrates that LLMs can provide high-level planning and reasoning skills and control interactive robot behavior in a multimodal environment, while multimodal modules with the context of the environmental state help ground the LLMs and extend their processing ability. The project website can be found at https://matcha-agent.github.io/.

@inproceedings{Zhao23ChatEnvironment, title = {Chat with the {{Environment}}: {{Interactive Multimodal Perception}} Using {{Large Language Models}}}, url = {https://ieeexplore.ieee.org/abstract/document/10342363/}, shorttitle = {Chat with the {{Environment}}}, booktitle = {2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, pages = {3590--3596}, organization = {IEEE}, place = {Detroit, USA}, author = {Zhao, Xufeng and Li, Mengdi and Weber, Cornelius and Hafez, Muhammad Burhan and Wermter, Stefan}, year = {2023}, month = oct, featured = {true}, }

- Internally Rewarded Reinforcement Learning. In Proceedings of the 40th International Conference on Machine Learning (ICML 2023), Jul 2023

We study a class of reinforcement learning problems where the reward signals for policy learning are generated by a discriminator that is dependent on and jointly optimized with the policy. This interdependence between the policy and the discriminator leads to an unstable learning process because reward signals from an immature discriminator are noisy and impede policy learning, and conversely, an under-optimized policy impedes discriminator learning. We call this learning setting Internally Rewarded Reinforcement Learning (IRRL) as the reward is not provided directly by the environment but internally by the discriminator. In this paper, we formally formulate IRRL and present a class of problems that belong to IRRL. We theoretically derive and empirically analyze the effect of the reward function in IRRL and based on these analyses propose the clipped linear reward function. Experimental results show that the proposed reward function can consistently stabilize the training process by reducing the impact of reward noise, which leads to faster convergence and higher performance compared with baselines in diverse tasks.

@inproceedings{Li*23InternallyRewarded, title = {Internally {{Rewarded Reinforcement Learning}}}, url = {https://proceedings.mlr.press/v202/li23ax.html}, shorttitle = {{{ICML}}}, booktitle = {Proceedings of the 40th International Conference on Machine Learning ({{ICML 2023}})}, place = {Honolulu, Hawaii USA}, author = {Li, Mengdi and Zhao, Xufeng and Lee, Jae Hee and Weber, Cornelius and Wermter, Stefan}, year = {2023}, month = jul, volume = {202}, pages = {20556--20574}, editor = {Krause, Andreas and Brunskill, Emma and Cho, Kyunghyun and Engelhardt, Barbara and Sabato, Sivan and Scarlett, Jonathan}, featured = {true}, }

- A Closer Look at Reward Decomposition for High-Level Robotic Explanations. In IEEE International Conference on Development and Learning (ICDL 2023), Nov 2023

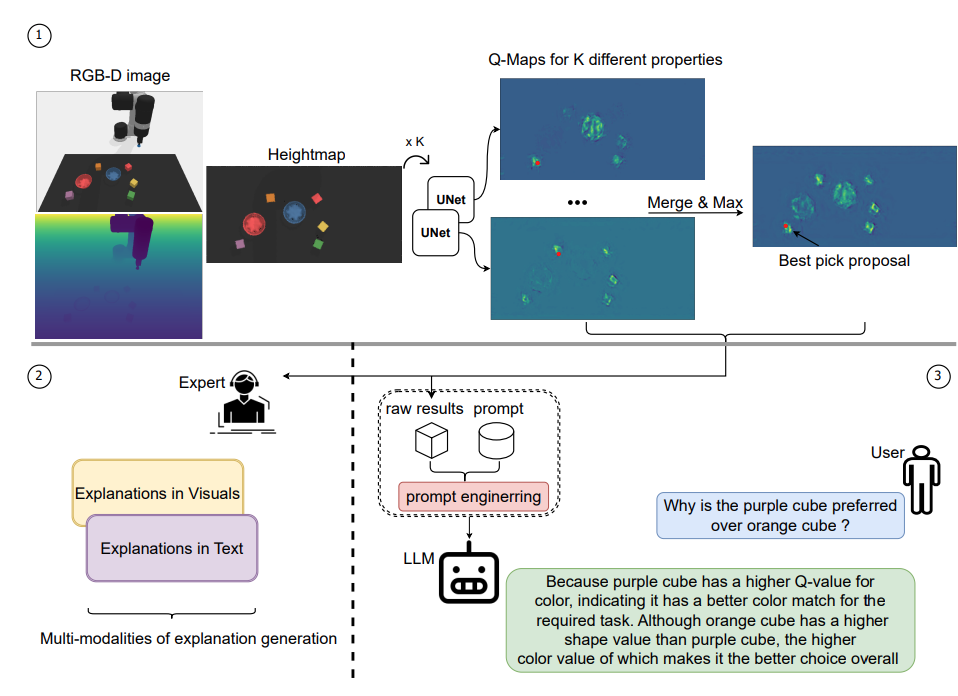

Explaining the behavior of intelligent agents such as robots to humans is challenging due to their incomprehensible proprioceptive states, variational intermediate goals, and resultant unpredictability. Moreover, one-step explanations for reinforcement learning agents can be ambiguous as they fail to account for the agent’s future behavior at each transition, adding to the complexity of explaining robot actions. By leveraging abstracted actions that map to task-specific primitives, we avoid explanations on the movement level. Our proposed framework combines reward decomposition (RD) with abstracted action spaces into an explainable learning framework, allowing for non-ambiguous and high-level explanations based on object properties in the task. We demonstrate the effectiveness of our framework through quantitative and qualitative analysis of two robot scenarios, showcasing visual and textual explanations, from output artifacts of RD explanation, that are easy for humans to comprehend. Additionally, we demonstrate the versatility of integrating these artifacts with large language models for reasoning and interactive querying.

@inproceedings{Lu23CloserLook, title = {A {{Closer Look}} at {{Reward Decomposition}} for {{High-Level Robotic Explanations}}}, url = {https://ieeexplore.ieee.org/abstract/document/10364407/}, author = {Lu, Wenhao and Zhao, Xufeng and Magg, Sven and Gromniak, Martin and Wermter, Stefan}, booktitle = {IEEE International Conference on Development and Learning (ICDL 2023)}, place = {Macau, China}, pages = {429-436}, year = {2023}, month = nov, }

2022

- Impact Makes a Sound and Sound Makes an Impact: Sound Guides Representations and Explorations. In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Oct 2022

Sound is one of the most informative and abundant modalities in the real world while being robust to sense without contacts by small and cheap sensors that can be placed on mobile devices. Although deep learning is capable of extracting information from multiple sensory inputs, there has been little use of sound for the control and learning of robotic actions. For unsupervised reinforcement learning, an agent is expected to actively collect experiences and jointly learn representations and policies in a self-supervised way. We build realistic robotic manipulation scenarios with physics-based sound simulation and propose the Intrinsic Sound Curiosity Module (ISCM). The ISCM provides feedback to a reinforcement learner to learn robust representations and to reward a more efficient exploration behavior. We perform experiments with sound enabled during pre-training and disabled during adaptation, and show that representations learned by ISCM outperform the ones by vision-only baselines and pre-trained policies can accelerate the learning process when applied to downstream tasks.

@inproceedings{Zhao22ImpactMakes, title = {Impact {{Makes}} a {{Sound}} and {{Sound Makes}} an {{Impact}}: {{Sound Guides Representations}} and {{Explorations}}}, url = {https://ieeexplore.ieee.org/abstract/document/9981510}, booktitle = {2022 {{IEEE}}/{{RSJ International Conference}} on {{Intelligent Robots}} and {{Systems}} ({{IROS 2022}})}, place = {Kyoto, Japan}, author = {Zhao, Xufeng and Weber, Cornelius and Hafez, Muhammad Burhan and Wermter, Stefan}, year = {2022}, month = oct, pages = {2512--2518}, publisher = {{IEEE}}, featured = {true}, }

2021

- Density Weighted Diversity Based Query Strategy for Active Learning. Tingting Wang, Xufeng Zhao, Qiujian Lv, Bo Hu, and Degang Sun. In 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), May 2021

Deep learning has made remarkable achievements in various domains. Active learning, which aims to reduce the budget for training a machine-learning model, is especially useful for deep learning tasks that demand a large number of labeled samples. Unfortunately, our empirical study finds that many active learning heuristics are not effective when applied to deep learning models in batch settings. To tackle these limitations, we propose a density weighted diversity based query strategy (DWDS), which makes use of the geometry of the samples. Within a limited labeling budget, DWDS enhances model performance by querying labels for the new training samples with the maximum informativeness and representativeness. Furthermore, we propose a beam-search based method to obtain a good approximation to the optimum of such samples. Our experiments show that DWDS outperforms existing algorithms in deep learning tasks.

@inproceedings{Wang21DensityWeighted, title = {Density {{Weighted Diversity Based Query Strategy}} for {{Active Learning}}}, url = {https://ieeexplore.ieee.org/abstract/document/9437695}, booktitle = {2021 {{IEEE}} 24th {{International Conference}} on {{Computer Supported Cooperative Work}} in {{Design}} ({{CSCWD}})}, place = {Dalian, China}, author = {Wang, Tingting and Zhao, Xufeng and Lv, Qiujian and Hu, Bo and Sun, Degang}, year = {2021}, month = may, pages = {156--161}, doi = {10.1109/CSCWD49262.2021.9437695}, keywords = {active learning,Approximation algorithms,Classification algorithms,Deep learning,density,diversity,Geometry,informativeness,Linear programming,representativeness,Search problems,Training}, }

Journal Articles

2024

- TMLR

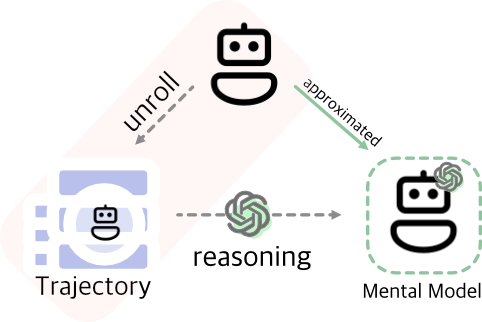

Mental Modeling of Reinforcement Learning Agents by Language Models. Transactions on Machine Learning Research, Jun 2024

Can emergent language models faithfully model the intelligence of decision-making agents? Though modern language models already exhibit some reasoning ability, and theoretically can potentially express any probable distribution over tokens, it remains underexplored how the world knowledge these pretrained models have memorized can be utilized to comprehend an agent’s behaviour in the physical world. This study empirically examines, for the first time, how well large language models (LLMs) can build a mental model of agents, termed agent mental modelling, by reasoning about an agent’s behaviour and its effect on states from agent interaction history. This research may unveil the potential of leveraging LLMs for elucidating RL agent behaviour, addressing a key challenge in eXplainable reinforcement learning (XRL). To this end, we propose specific evaluation metrics and test them on selected RL task datasets of varying complexity, reporting findings on agent mental model establishment. Our results disclose that LLMs are not yet capable of fully mental modelling agents through inference alone without further innovations. This work thus provides new insights into the capabilities and limitations of modern LLMs.

@article{Lu24MentalModeling, title = {Mental {{Modeling}} of {{Reinforcement Learning Agents}} by {{Language Models}}}, author = {Lu, Wenhao and Zhao, Xufeng and Spisak, Josua and Lee, Jae Hee and Wermter, Stefan}, year = {2024}, month = jun, url = {https://openreview.net/forum?id=JN7iNWaPTe}, urldate = {2024-06-27}, journal = {Transactions on Machine Learning Research}, }

2018

- Journal

Multi-path clutter suppression in passive radar reference channel based on digital TV signal. Xufeng Zhao, Daojing Li, and Xuan Hu. Journal of University of Chinese Academy of Sciences, Jul 2018

Passive radar, which uses a third-party radiation source signal for mo...

@article{Zhao18MultipathClutter, title = {{Multi-path clutter suppression in passive radar reference channel based on digital TV signal}}, url = {http://journal.ucas.ac.cn/EN/abstract/abstract12593.shtml}, author = {Zhao, Xufeng and Li, Daojing and Hu, Xuan}, year = {2018}, month = jul, journal = {Journal of University of Chinese Academy of Sciences}, volume = {35}, number = {4}, pages = {529}, issn = {2095-6134}, doi = {10.7523/j.issn.2095-6134.2018.04.016}, langid = {cn}, bibtex_show = true, }

- Journal

Impact and Correction of Phase Error in Ladar Signal on Synthetic Aperture Imaging. Xuan Hu, Daojing Li, Tian He, and Xufeng Zhao. Infrared and Laser Engineering, Jul 2018

For synthetic aperture ladar (SAL), the impact of signal phase error on synthetic aperture imaging was analyzed. The laser signal was modeled, the impact of laser signal coherence on SAL azimuthal resolution was analyzed, and a solution based on delaying the local oscillator signal was proposed. The impact of nonlinear distortion in the LFM signal on range resolution was also analyzed. To address the random initial phase error introduced during laser LFM signal modulation and amplification, a reference-channel-based method for calibrating and correcting nonlinear distortion and phase error was proposed. Experimental and simulation results are presented.

@article{Hu18ImpactCorrection, title = {{Impact and Correction of Phase Error in Ladar Signal on Synthetic Aperture Imaging}}, url = {http://www.irla.cn/en/article/doi/10.3788/IRLA201847.0306001}, author = {Hu, Xuan and Li, Daojing and He, Tian and Zhao, Xufeng}, year = {2018}, journal = {Infrared and Laser Engineering}, volume = {47}, number = {3}, pages = {306001--0306001}, langid = {cn}, }

Theses

2018

- Master Thesis

外辐射源雷达多径杂波抑制和多帧信号处理 (Multipath Clutter Suppression and Multi-Frame Signal Processing for Passive Radar). Xufeng Zhao. University of Chinese Academy of Sciences (中国科学院大学), Jul 2018

@mastersthesis{ZhaoXuFeng18WaiFuSheYuanLeiDaDuoJingZaBoYiZhiHeDuoZhengXinHaoChuLi, author = {Zhao, Xufeng}, school = {University of Chinese Academy of Sciences (中国科学院大学)}, place = {Beijing, China}, title = {外辐射源雷达多径杂波抑制和多帧信号处理}, year = {2018}, url = {http://www.irgrid.ac.cn/handle/1471x/2327612?mode=full}, }

See also my Google Scholar Profile